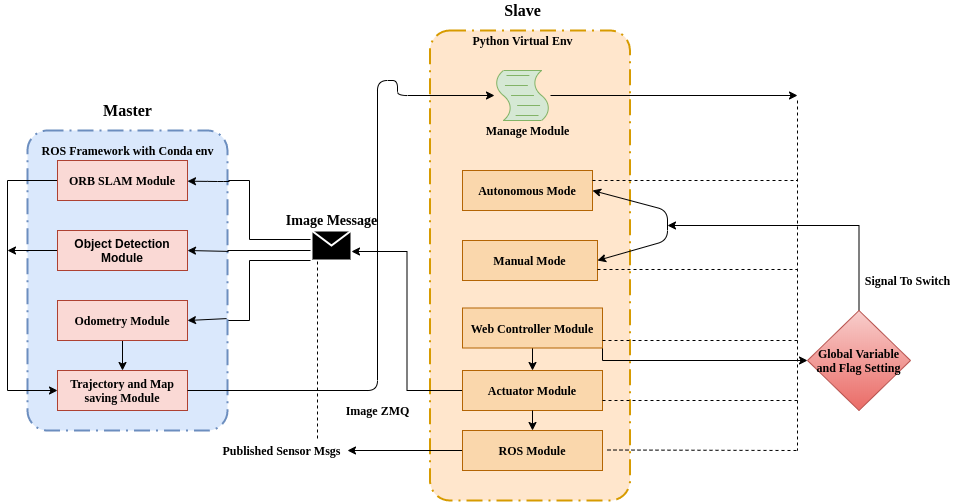

Flowchart

1. Master-Slave Concept

- We use this technique to improve performance on the Raspberry Pi by offloading computationally heavy modules to a more powerful machine.

Abstract

- The master is a laptop.

- The slave is the robot.

The operations and modules that run on each side are listed below.

Master Node functionalities

Slave Node functionalities

2. Object Detection Module

- We used a MobileNet SSD model with transfer learning for object detection. Objects from the pretrained classes can be recognised, and the label of each detected object is sent to the slave after processing.
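The step that turns raw detections into labels for the slave can be sketched as a small filtering function. This is a minimal sketch and not the project's code. It makes two assumptions:

- Each detection row follows the `[image_id, class_id, confidence, x1, y1, x2, y2]` layout that OpenCV's `dnn` module returns for Caffe SSD models, reshaped from 1 × 1 × N × 7 to N × 7.
- The label set is the 21-class PASCAL VOC list of the commonly distributed Caffe MobileNet-SSD model.

The function name and the 0.5 threshold are hypothetical.

```python
from typing import Iterable, List, Sequence

# Assumed label set: the 21 PASCAL VOC classes of the commonly distributed
# Caffe MobileNet-SSD model (index 0 is the background class).
VOC_CLASSES = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse",
    "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]


def detections_to_labels(
    detections: Iterable[Sequence[float]], threshold: float = 0.5
) -> List[str]:
    """Return the unique class labels whose confidence reaches `threshold`.

    Each row is assumed to be [image_id, class_id, confidence, x1, y1, x2, y2]
    (hypothetical layout matching OpenCV's SSD output reshaped to N x 7).
    """
    labels: List[str] = []
    for row in detections:
        class_id, confidence = int(row[1]), float(row[2])
        # Skip low-confidence boxes, the background class and unknown ids.
        if confidence < threshold or not 0 < class_id < len(VOC_CLASSES):
            continue
        label = VOC_CLASSES[class_id]
        if label not in labels:  # report each label once per frame
            labels.append(label)
    return labels


if __name__ == "__main__":
    frame_detections = [
        [0, 15, 0.92, 0.10, 0.20, 0.40, 0.90],  # person
        [0, 7, 0.35, 0.50, 0.50, 0.80, 0.70],   # car, below the threshold
        [0, 15, 0.81, 0.60, 0.10, 0.90, 0.95],  # a second person
    ]
    print(detections_to_labels(frame_detections))  # ['person']
```

In a ROS node, the resulting list would then be published, for example as a `std_msgs/String`. The topic name depends on the project's configuration.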

Reason

Single Shot Detector (SSD) needs only a single shot (one forward pass of the network) to detect multiple objects within an image. It comprises two parts:

- Extracting feature maps

- Applying convolution filters to detect objects

MobileNet is preferred over ResNet, VGG or AlexNet because those networks are large and require far more computation. MobileNet instead has a simple architecture built from depthwise separable convolutions: a 3 × 3 depthwise convolution followed by a 1 × 1 pointwise convolution.
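The saving from the depthwise separable design can be checked by counting multiply-adds for both layer types. This is a minimal sketch assuming stride 1 and an output feature map of the same spatial size as the input. The layer sizes in the example are illustrative and not taken from the project.

Use these symbols:

- k: kernel size
- m: input channels
- n: output channels
- f: size of the f × f feature map

With these, the separable-to-standard cost ratio works out to 1/n + 1/k².

```python
from fractions import Fraction


def standard_conv_cost(k: int, m: int, n: int, f: int) -> int:
    """Multiply-adds of a k x k standard convolution: m input channels,
    n output channels, f x f output feature map."""
    return k * k * m * n * f * f


def depthwise_separable_cost(k: int, m: int, n: int, f: int) -> int:
    """Multiply-adds of a k x k depthwise convolution (one filter per input
    channel) followed by a 1 x 1 pointwise convolution from m to n channels."""
    depthwise = k * k * m * f * f
    pointwise = m * n * f * f
    return depthwise + pointwise


def cost_ratio(k: int, m: int, n: int, f: int) -> Fraction:
    """Exact separable/standard cost ratio; algebraically equal to 1/n + 1/k**2."""
    return Fraction(depthwise_separable_cost(k, m, n, f), standard_conv_cost(k, m, n, f))


if __name__ == "__main__":
    # Illustrative layer: 3 x 3 kernel, 32 -> 64 channels, 56 x 56 feature map.
    k, m, n, f = 3, 32, 64, 56
    print("standard: ", standard_conv_cost(k, m, n, f))
    print("separable:", depthwise_separable_cost(k, m, n, f))
    print("ratio:    ", cost_ratio(k, m, n, f))  # 73/576
```

For a 3 × 3 kernel the saving approaches a factor of nine as the number of output channels grows. With 64 output channels, the separable layer needs roughly 7.9 times fewer multiply-adds.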

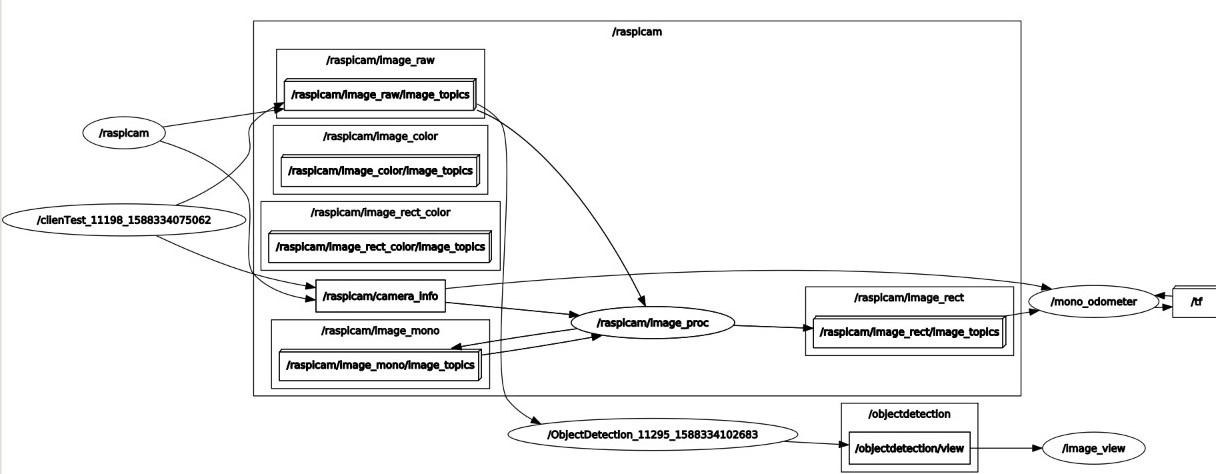

- After starting all the nodes using `rosrun <package> <pythonfile>` or `roslaunch`, run the command below to visualise the node graph, as shown in the figure.

`rqt_graph`

- All the labels are published by the ObjectDetection ROS node.

- Using the image_pipeline package, we can remap the topic on which the images are received from the slave.

- To quickly train the system to recognise new objects, we used Haar cascade detection.

- Positive and negative images collected with the camera are used to train the model with Cascade Trainer GUI, a graphical tool for training cascade classifiers.

- The XML file generated after the training stages can be used to detect those particular objects in real time.
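Haar cascades are fast because each rectangle feature is evaluated in constant time from an integral image (summed-area table). The sketch below illustrates that idea in plain Python. It is not OpenCV's implementation, and the function names and the single two-rectangle feature are illustrative. In practice, the trained XML file is loaded with OpenCV's `cv2.CascadeClassifier` and applied to frames with `detectMultiScale`.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Summed-area table padded with a zero row/column:
    ii[y][x] = sum of img[r][c] for r < y and c < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half.
    `w` is the total width and is assumed to be even."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


if __name__ == "__main__":
    # A tiny 4 x 4 "image" with a bright left half and a dark right half (an edge).
    img = [
        [9, 9, 1, 1],
        [9, 9, 1, 1],
        [9, 9, 1, 1],
        [9, 9, 1, 1],
    ]
    ii = integral_image(img)
    print(rect_sum(ii, 0, 0, 4, 4))          # 80
    print(two_rect_feature(ii, 0, 0, 4, 4))  # 72 - 8 = 64
```

A strong feature response like this one marks an edge. A cascade combines many such features, and its early stages reject most windows cheaply.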

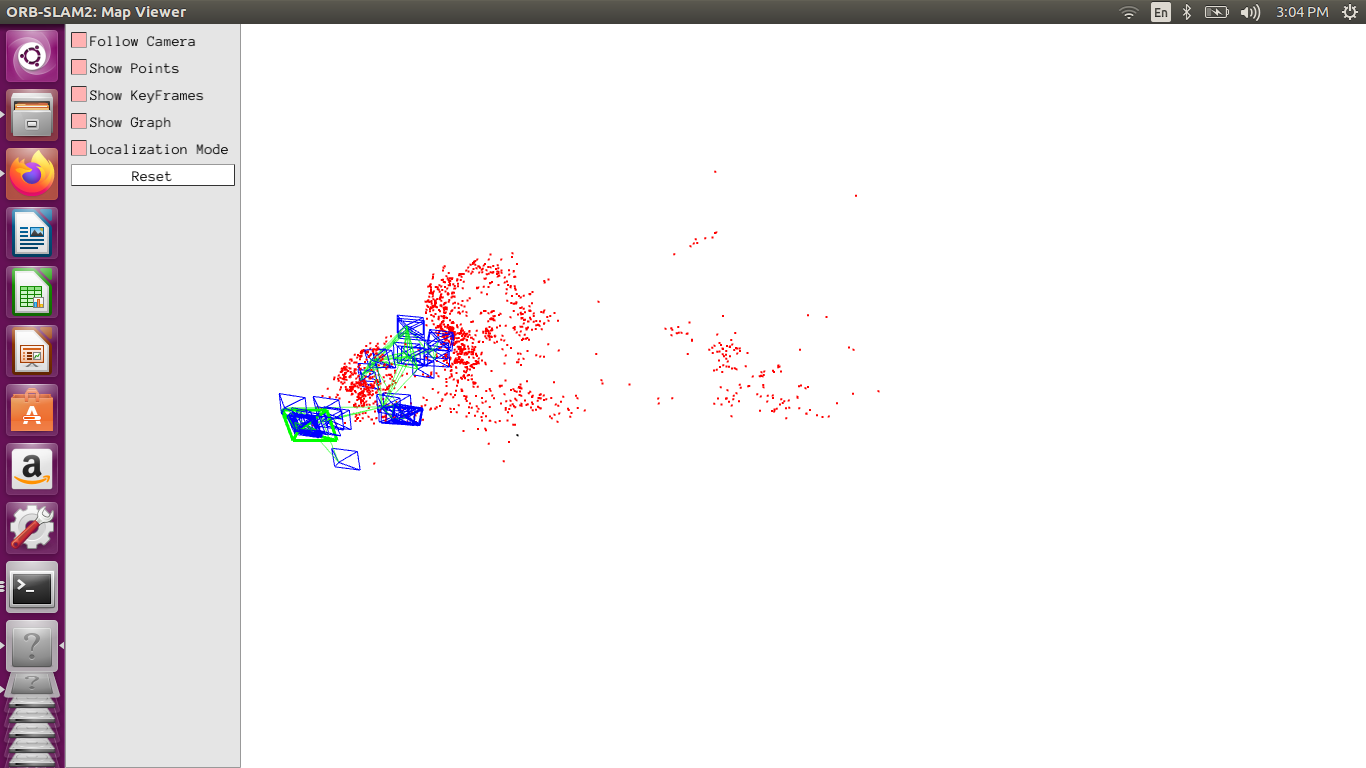

3. SLAM Module and VISO (Visual Odometry)

- See this link to learn more about the odometry package.

- Our aim was to use a single camera for localisation, so we used VISO, which provides estimates of the robot's x, y, z coordinates.

- With ORB-SLAM2 and a monocular camera, a map of the surroundings can be constructed, as shown above, which helps in avoiding obstacles.

- The Pangolin viewer is used to visualise the SLAM output.
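Visual odometry estimates the camera's motion between consecutive frames, and the robot's position is obtained by chaining (composing) those relative motions. The sketch below shows that composition for a planar robot (x, y, heading) to keep it short. It illustrates dead-reckoning from relative poses and is not the VISO or ORB-SLAM2 implementation. The motion increments are made up.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians

    def compose(self, delta: "Pose2D") -> "Pose2D":
        """Apply a motion `delta` expressed in this pose's own (robot) frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        heading = self.theta + delta.theta
        return Pose2D(
            x=self.x + c * delta.x - s * delta.y,
            y=self.y + s * delta.x + c * delta.y,
            theta=math.atan2(math.sin(heading), math.cos(heading)),  # wrap to [-pi, pi]
        )


def integrate(increments: Iterable[Pose2D], start: Pose2D = Pose2D()) -> Pose2D:
    """Chain frame-to-frame motion estimates into a global pose."""
    pose = start
    for delta in increments:
        pose = pose.compose(delta)
    return pose


if __name__ == "__main__":
    # Hypothetical estimates: forward 1 m, turn left 90 degrees, forward 1 m.
    steps = [Pose2D(1.0, 0.0, 0.0), Pose2D(0.0, 0.0, math.pi / 2), Pose2D(1.0, 0.0, 0.0)]
    final = integrate(steps)
    print(f"x={final.x:.2f}, y={final.y:.2f}, heading={math.degrees(final.theta):.1f} deg")
```

The 3D case composes rotations and translations in the same way, typically with rotation matrices or quaternions. Because every increment carries some error, the chained estimate drifts over time.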

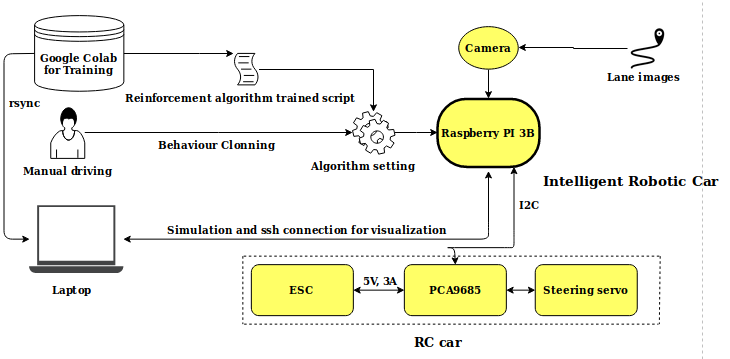

4. Autonomous Driving Module

Below is the block diagram of the module.


RC car

A radio-controlled (RC) car consists of an electronic speed controller (ESC), a PCA9685 PWM driver for throttle and steering control, and a steering servo. The PCA9685 driver is interfaced with the Raspberry Pi over the I2C serial communication protocol. Based on the algorithm, the Raspberry Pi sends the corresponding steering and throttle outputs to the PCA9685, which controls the steering angle of the front wheels and the throttle of the RC car.
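The conversion from a steering or throttle command to a PCA9685 output can be sketched as below. This is a minimal sketch under the following assumptions:

- The PWM frequency is 50 Hz.
- The pulse range is 1000 to 2000 µs, with 1500 µs as neutral. This is typical for hobby servos and ESCs, but the project's calibration values are not given.
- Commands are normalised to [-1, 1].

The PCA9685 uses a 25 MHz internal oscillator and a 12-bit (4096-step) counter. Its datasheet gives the prescale value as round(25 MHz / (4096 × frequency)) − 1. The function names are hypothetical, and the actual I2C register writes would be left to a driver library.

```python
OSC_HZ = 25_000_000   # PCA9685 internal oscillator
RESOLUTION = 4096     # 12-bit counter per PWM period

# Assumed calibration values; real cars need per-vehicle tuning.
PWM_FREQ_HZ = 50
MIN_PULSE_US, NEUTRAL_PULSE_US, MAX_PULSE_US = 1000, 1500, 2000


def prescale_for(freq_hz: float) -> int:
    """PRE_SCALE register value for the requested PWM frequency (datasheet formula)."""
    return round(OSC_HZ / (RESOLUTION * freq_hz)) - 1


def pulse_to_ticks(pulse_us: float, freq_hz: float = PWM_FREQ_HZ) -> int:
    """Number of the 4096 ticks per period during which the output stays high."""
    period_us = 1_000_000 / freq_hz
    return int(round(pulse_us / period_us * RESOLUTION))


def command_to_ticks(command: float) -> int:
    """Map a normalised steering/throttle command in [-1, 1] to PWM ticks."""
    command = max(-1.0, min(1.0, command))  # clamp out-of-range commands
    if command >= 0:
        pulse = NEUTRAL_PULSE_US + command * (MAX_PULSE_US - NEUTRAL_PULSE_US)
    else:
        pulse = NEUTRAL_PULSE_US + command * (NEUTRAL_PULSE_US - MIN_PULSE_US)
    return pulse_to_ticks(pulse)


if __name__ == "__main__":
    print(prescale_for(PWM_FREQ_HZ))   # 121
    print(command_to_ticks(0.0))       # 307 (neutral)
```

The legacy Adafruit_PCA9685 Python library is one example of such a driver. With it, the value would be written with `pwm.set_pwm(channel, 0, ticks)` after `pwm.set_pwm_freq(50)`. The channel numbers for the ESC and the steering servo depend on the wiring.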

Raspberry Pi 3B

A single-board computer that outputs digital pulses or signals from its GPIO (general-purpose input/output) pins based on the algorithm. The Raspberry Pi camera is also interfaced with it for image processing. The GUI (graphical user interface) or the terminal of the Raspbian OS installed on the Raspberry Pi can be accessed from a computer over an SSH (Secure Shell) connection.